Sitecore Search Data Ingestion from multiple sources and Search Recommendation API

Sitecore Search is a headless, AI-driven content search solution. It provides personalized and predictive search content based on user behavior. It is built on the same technology as Sitecore Discover, but adapted to the needs of brands that focus on content.

Sitecore Search enables our customers to connect every visitor with the right content at lightning speed, personalized to their intent and browsing behavior even on their first visit.

To return relevant search results to users, Sitecore Search needs content to be ingested into it, either through a Source crawler or through the Ingestion API.

Once we have access to the Search Customer Engagement Console (CEC), we can configure different sources to ingest content into Sitecore Search.

Steps to ingest data in CEC

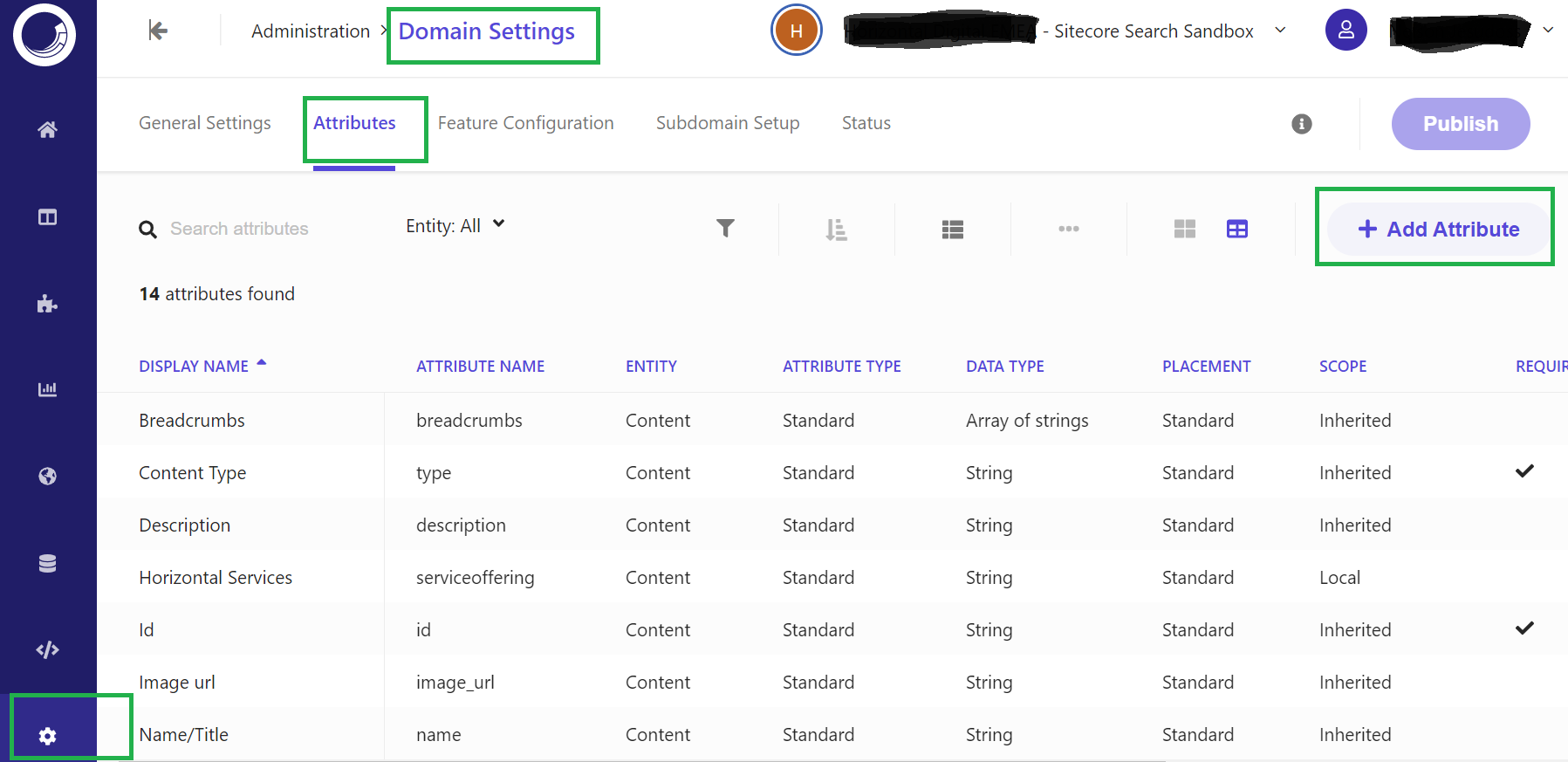

- We need to define attributes in CEC so that metadata from sources such as web pages and documents can be mapped to them.

- Click on Administration (Administrative tools) → Domain Settings

- After attributes are created, let us create Sources with different types of connectors based on our requirements.

- The types of connectors available to crawl the content are:

- API Crawler (Used to crawl the API / JSON)

- Push API

- Web Crawler

- Web Crawler (Advanced) - This connector is used to crawl different kinds of source files and extract their content (Sitemap.xml, HTML, RSS files, PDF, MS Office documents, etc.)

- Once the Source is created with a connector, the connector fetches content according to the Source settings below. These settings differ slightly between connector types.

- Max depth sets how many levels of hyperlinks the crawler follows from the starting URL.

- Authentication settings are needed when we want to crawl pages that require authentication.

- Incremental Updates settings are used to enable the incremental updates of the indexed content.

- Scan frequency settings schedule automatic re-indexing at a specified date, time, and interval.

- Tags definition settings define how the content is tagged.

- Request Extractor is used when the Triggers do not cover all the URLs to crawl. With a Request Extractor we provide the additional URLs whose content the Triggers do not reach.

- Triggers and Document Extractors are the most important Source settings, because they tell the crawler where to start and what to extract.

- Triggers

- A trigger points the crawler at the content provider. Based on the defined rules, the crawler then processes that set of pages and the hyperlinks in them, honoring the include and exclude links, the crawl depth, and any authentication needed for password-protected pages.

- A trigger is the starting point that the crawler uses to look for content to index.

- A trigger can take its content from any one of the following, or from a combination:

- Sitemap (Crawls the content from the Sitemap.xml file)

- RSS (Crawls the content from the RSS feed URL)

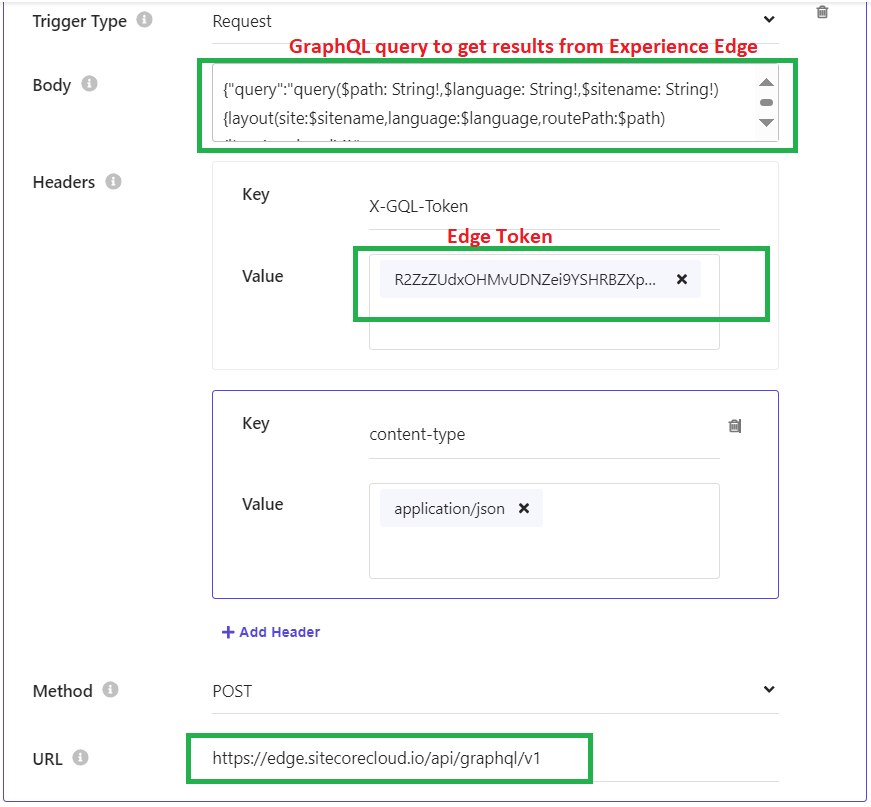

- Request (Crawls the content from a website URL, JSON endpoint, PDF URL, etc.)

- JavaScript (A JavaScript function that returns the URLs to crawl for content)
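To make the JavaScript trigger type more concrete, here is a minimal sketch of such a function. It assumes the crawler calls a function named `extract` and expects an array of objects with a `url` property; the domain and the section paths are placeholders, so check the exact contract in the CEC before relying on it.

```javascript
// Sketch of a JavaScript trigger: returns the list of URLs the crawler should start from.
// Assumption: the crawler invokes extract() and reads the `url` of each returned object.
function extract() {
  const baseUrl = "https://www.example.com"; // placeholder domain
  const sectionPaths = ["/blog", "/docs", "/news"]; // hypothetical site sections
  return sectionPaths.map((path) => ({ url: baseUrl + path }));
}
```

A function like this is handy when the starting URLs follow a pattern that is easier to generate than to list by hand.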

- Document Extractor

- The Document Extractor extracts content from the pages crawled at the URLs provided by the Triggers.

- It helps filter the provided URLs so that only content from URLs matching the rule is crawled, instead of every hyperlink.

- There are different ways to restrict the URLs to match the rule for crawling:

- Glob Expression

- Regex Expression

- JS
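The difference between the three matcher styles can be illustrated outside the CEC with plain JavaScript. This is only a sketch: the tiny `globToRegExp` helper is hypothetical and supports just `*` and `**`, the URLs are placeholders, and the glob dialect that Sitecore Search actually uses may differ.

```javascript
// Convert a simple glob (only * and ** are supported here) into a RegExp.
function globToRegExp(glob) {
  // Escape regex metacharacters, leaving * alone for the steps below.
  const escaped = glob.replace(/[.+?^${}()|[\]\\]/g, "\\$&");
  const pattern = escaped
    .replace(/\*\*/g, "\u0000") // temporarily mark ** so the next step skips it
    .replace(/\*/g, "[^/]*") // * matches within a single path segment
    .replace(/\u0000/g, ".*"); // ** matches across path segments
  return new RegExp("^" + pattern + "$");
}

// Glob style: everything under /blog/.
const blogGlob = globToRegExp("https://www.example.com/blog/**");

// Regex style: the same rule written directly as a regular expression.
const blogRegex = /^https:\/\/www\.example\.com\/blog\/.+$/;

// JS style: an arbitrary predicate, useful when the rule needs more logic.
function isBlogPost(url) {
  return url.includes("/blog/") && !url.endsWith(".pdf");
}
```

Glob is usually the easiest to read, regex gives finer control, and a JS predicate is the fallback when neither can express the rule.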

- Content extraction is done by one of several Extraction Types, with the help of Taggers:

- CSS (Extract from DOM)

- XPath (Extracts the content from the DOM based on an XPath query)

- JS (Content extraction uses Cheerio syntax and must return an array of objects keyed by the defined attributes)

- The extracted content is mapped to the attributes (url, type, name, etc.) that we defined earlier, and is then indexed.

- Once all the configuration is done, publish the Source to start scanning and indexing.
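As an illustration of the JS extraction type, the sketch below returns one document per page using Cheerio-style calls. It assumes the crawler calls `extract(request, response)`, that `response.body` is a Cheerio handle on the fetched page, and that `request.url` holds the page URL. The `description` attribute and the `article` type value are hypothetical and would need to exist in Domain Settings.

```javascript
// Sketch of a JS document extractor using Cheerio-style selectors.
// Assumptions: response.body is a Cheerio handle, request.url is the crawled URL.
function extract(request, response) {
  const $ = response.body;
  const title = $("title").text().trim();
  const description = $('meta[name="description"]').attr("content") || "";
  // Return an array of objects whose keys match the attributes defined in CEC.
  return [
    {
      url: request.url,
      type: "article", // hypothetical value for the type attribute
      name: title,
      description: description, // hypothetical extra attribute
    },
  ];
}
```

Falling back to an empty string for a missing meta tag keeps the returned object complete even when a page has no description.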

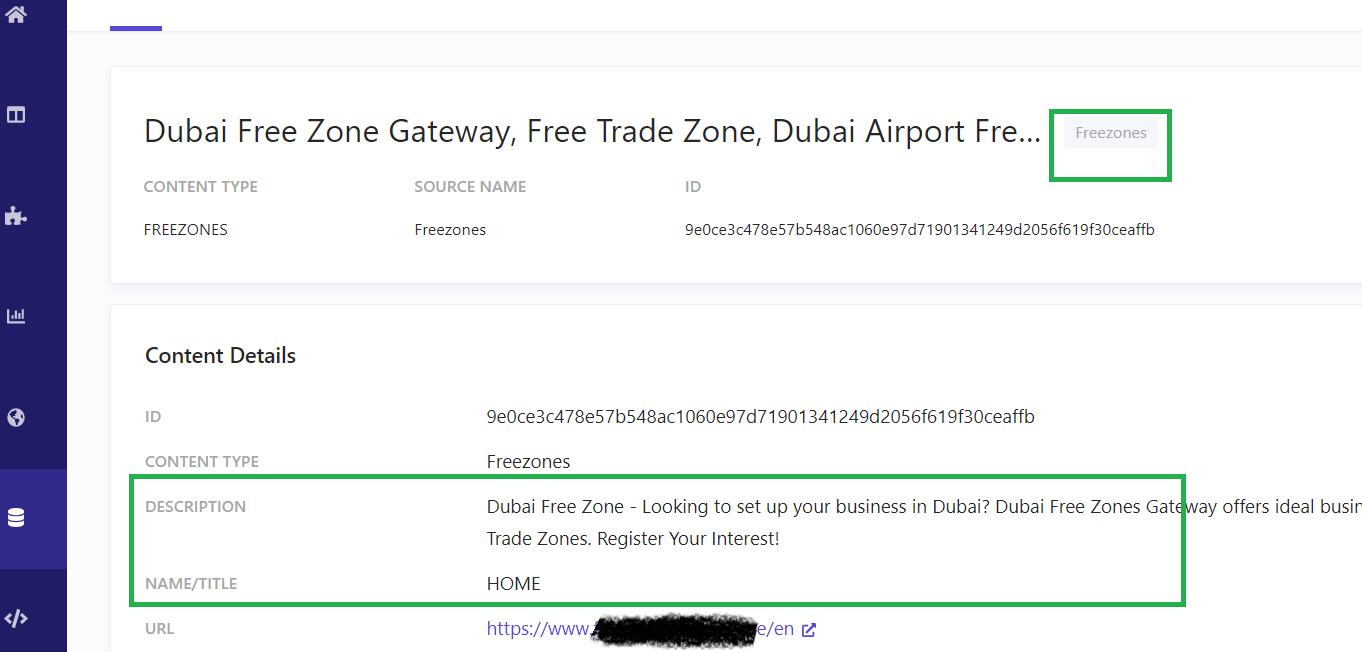

- All the indexed content will be available in the Catalog section.

- Ingesting Different data types:

- Sitemap

- Create Source using Web Crawler connector

- Update the Web Crawler Settings with the Sitemap.xml file URL and set MAX DEPTH to 0. The sitemap already lists every URL to crawl in its <loc> nodes, so no further link depth is needed.

- Increase the Timeout settings

- Attribute Extraction offers only the XPath and Meta Tag options for this connector; JS and CSS are not available.

- Create XPath expressions that work on all the pages so that the crawler extracts the content without failures. A practical approach is to use View page source and identify the DOM content (metadata, HTML nodes, etc.) that needs to be extracted across all the pages.

- RSS

- Create source using Web Crawler (Advanced) connector

- Update the Web Crawler Settings with the RSS file URL and set MAX DEPTH to 0. The RSS feed already lists every URL to crawl in its <link> nodes, so no further link depth is needed.

- Increase the Timeout settings

- Create the Trigger with the Trigger Type set to RSS and set URLs to the RSS feed URL

- Document Extractor will provide an option to extract content using XPath, JS, and CSS.

- Select Extractor Type as JS

- Use View page source to identify the DOM content (metadata, HTML nodes, etc.) that needs to be extracted across all the pages.

- WORD

- Create source using Web Crawler (Advanced) connector

- Update the Web Crawler Settings and set MAX DEPTH to 0.

- Increase the Timeout settings

- Create the Trigger with the Trigger Type set to Request and set URLs to the Word document URL

- Document Extractor will provide an option to extract content using XPath, JS, and CSS.

- Select Extractor Type as JS

- Look at the structure of the Word document (headings, bullet lists, tables, etc.) and write the extraction expression accordingly.

- PDF

- Create source using Web Crawler (Advanced) connector

- Update the Web Crawler Settings and set MAX DEPTH to 0.

- Increase the Timeout settings

- Create the Trigger with the Trigger Type set to Request and set URLs to the PDF document URL

- Document Extractor will provide an option to extract content using XPath, JS, and CSS.

- Select Extractor Type as JS

- I used an online PDF-to-HTML converter to inspect the DOM of the PDF, and based on that I wrote a Cheerio function to extract the content from the PDF

- HTML

- Create source using Web Crawler (Advanced) connector

- Update the Web Crawler Settings and set MAX DEPTH to the required depth.

- Increase the Timeout settings

- Create the Trigger with the Trigger Type set to Request to crawl the website URL.

- Document Extractor will provide an option to extract content using XPath, JS, and CSS.

- Set Extractor Type to XPath, or select JS or CSS based on our requirements.

- JSON

- Create source using API Crawler connector

- Update the Web Crawler Settings and set MAX DEPTH to 0

- Increase the Timeout settings

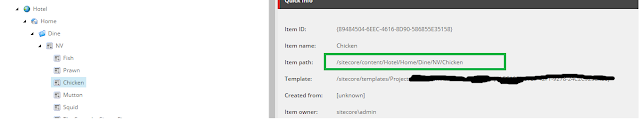

- Create the Trigger with the Trigger Type set to Request to crawl the JSON endpoint URL.

- Document Extractor will provide an option to extract content using JS and JSONPath.

- Select Extractor Type as JS.
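For a JSON source, a JS extractor could look like the sketch below. It assumes the crawler calls `extract(request, response)` and that `response.body` holds the JSON returned by the endpoint, either already parsed or as a string. The `items`, `id`, `link`, and `title` fields belong to a hypothetical feed and must be adapted to the real response.

```javascript
// Sketch of a JS document extractor for a JSON endpoint.
// Assumption: response.body is the endpoint's JSON, parsed or as a raw string.
function extract(request, response) {
  const data =
    typeof response.body === "string" ? JSON.parse(response.body) : response.body;
  // `items` is a hypothetical array in the feed; default to an empty list if absent.
  const items = Array.isArray(data.items) ? data.items : [];
  // Map each feed entry onto the attributes defined in CEC.
  return items.map((item) => ({
    id: String(item.id),
    url: item.link,
    type: "json", // hypothetical value for the type attribute
    name: item.title,
  }));
}
```

Because the function returns one object per feed entry, a single endpoint can produce many indexed documents.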

- Sources

- API

- Different APIs are available: for pushing content for indexing, for applications to query search results from Sitecore Search, and for tracking user actions.

- An API key is required to access the endpoints for pushing content, searching, and capturing events.

- A Sitecore representative sets up these API keys during Sitecore Search onboarding.

- Ingestion API

- This API is used to push content to Sitecore Search through a Source that uses the Push API connector. I will provide more information on this in another blog post.

- Search and Recommendation API

- Users can search the website's content through Sitecore Search using the following API.

- To run a search, we need to create a Search widget on the Widgets tab and query for content through that widget.

- Use HTTP POST to call the search endpoint with the Domain ID and the access token obtained from the Authentication API
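The search call described above could be sketched as follows. Everything specific here is an assumption to verify against the API reference: the request body shape (`widget.items`, `entity`, `rfk_id`, `search.query.keyphrase`), the Bearer authorization scheme, and the `endpointUrl` parameter, which stands in for the domain-specific search URL that includes the Domain ID.

```javascript
// Build the JSON body for a keyword search against one Search widget.
// The field names are assumptions; confirm them in the API reference.
function buildSearchRequest(widgetId, keyphrase, limit = 10) {
  return {
    widget: {
      items: [
        {
          entity: "content", // assumed entity name
          rfk_id: widgetId, // ID of the Search widget created on the Widgets tab
          search: {
            content: {}, // assumed: request the indexed attributes
            limit: limit,
            query: { keyphrase: keyphrase },
          },
        },
      ],
    },
  };
}

// POST the body to the search endpoint.
// endpointUrl is a placeholder; accessToken comes from the Authentication API.
async function searchContent(endpointUrl, accessToken, body) {
  const response = await fetch(endpointUrl, {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: "Bearer " + accessToken, // assumed auth scheme
    },
    body: JSON.stringify(body),
  });
  if (!response.ok) {
    throw new Error("Search request failed with status " + response.status);
  }
  return response.json();
}
```

Keeping the body builder separate from the network call makes it easy to inspect or log the request before sending it.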

- Event API

- This API is used to track user actions such as:

- Visits

- Click actions, etc.

- Endpoint used for tracking user events:

- https://api-........com/event/{key}/v4/publish
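A minimal sketch of publishing an event to an endpoint of this form is shown below. It assumes the endpoint accepts an HTTP POST with a JSON body, which the steps above do not state. The endpoint template is passed in as a parameter because the real host is not reproduced here, and the event payload is left to the caller since its fields depend on the event type.

```javascript
// Fill the {key} placeholder of the event endpoint template.
function buildEventUrl(endpointTemplate, key) {
  return endpointTemplate.replace("{key}", encodeURIComponent(key));
}

// Publish one event. Assumption: the endpoint accepts a JSON body via POST.
async function publishEvent(endpointTemplate, key, event) {
  const response = await fetch(buildEventUrl(endpointTemplate, key), {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(event),
  });
  if (!response.ok) {
    throw new Error("Event publish failed with status " + response.status);
  }
  return response.status;
}
```

For example, `buildEventUrl("https://events.example.com/event/{key}/v4/publish", "abc123")` produces the URL for a key `abc123` on a placeholder host.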
